Public statement on blocking QOTO.org from Jubilife Global Terminal

QOTO has a moderation philosophy that is incompatible with how I run Jubilife: they do not suspend any instances (see their instance short description in the sidebar, which includes “We federate with all servers: we don’t block any servers”). Even the “silence” level block is used very sparingly; only 4 instances are silenced from QOTO as of this writing. Freeman himself interacts with instances and individuals for whom we have near-zero tolerance; a quick scan of his profile while responding to his email showed boosts from gleasonator.com, gameliberty.club, and shitposter.club. The last of these, SPC, has been a fashy hellhole since before Mastodon existed. There is no excuse for an admin of an instance that has been around since 2018 to be boosting a goddamn thing from SPC. Jubilife and our fellow travelers in the fediverse give no quarter to fascists, and if you do, then you are not welcome in our communities.

Freeman’s email and my response are embedded in the gist below:

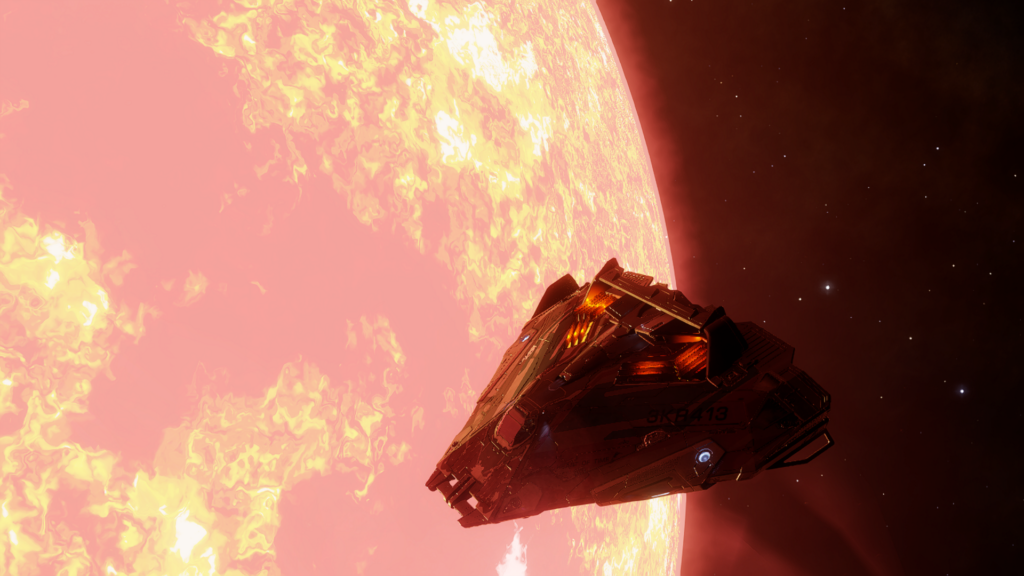

Nova Elys’ Logbook, 7 November 3308: First Hutton Run

Got my commemorative mug, availed myself of the station’s bar, and loaded up my hold with several tons of that Centauri gin people like for some stars-damned reason. Then, before I took the high wake highway home, I did a little tourism of the few sights there are to see out by Proxima, namely Eden and the red dwarf itself.

Now I’m back at Whitson in my hab after offloading that booze, I’ve got the Heart in the shop, and I’m ready to get some shuteye. Tomorrow I need to take the Overcoat back out to Opala to finish a salvage job; maybe this time I can actually get a bead on the signal for those samples…

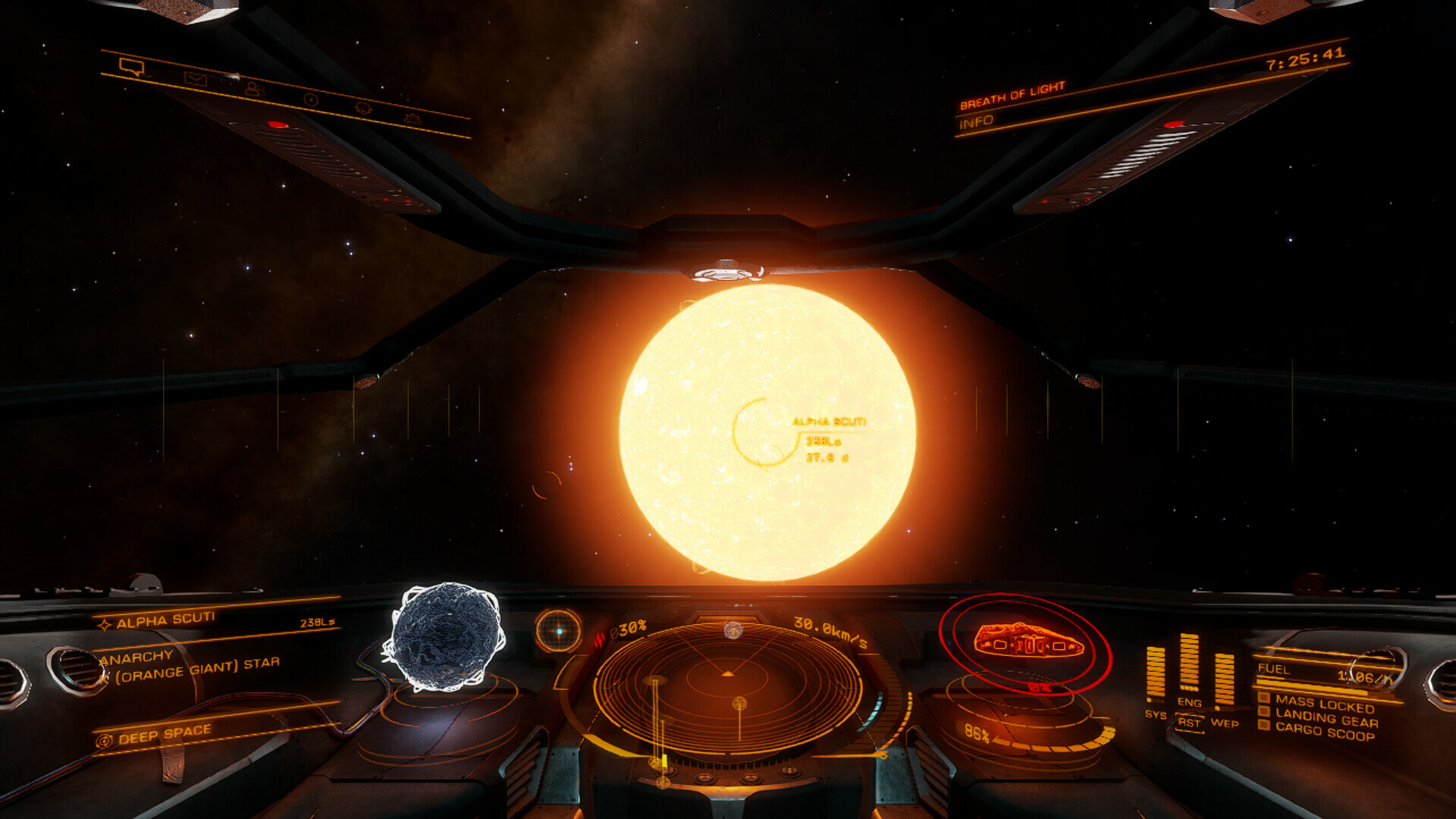

Nova Elys’ Logbook, 4 November 3308

Took a trip out to Alpha Scuti as a bit to razz a friend about their freckles looking like Scutum. It was worth it for the data to sell to Universal Cartographics but gotdamn I probably should’ve just taken the Heart of Time instead of turning the old Sidewinder into a flying gascan; why in the hell did I do that‽

New website

podfeels Demo 1: godfeels 2.1 ch 5

Our first demo, June’s coming out scene (i.e. chapter 5 of godfeels 2, part 1: bittersweet june), was released on Monday: https://drive.google.com/file/d/1KtMRHGzaWS-hcDLyAxN3TfZR3zCkPEQz/view

Response to You’re Wrong About’s Cancel Culture episode

Originally posted on 9 June 2021 on the Community section of You’re Wrong About’s Patreon.

I really hate to post something like this, but I am… disappointed with the cancel culture episode. It is, in my view, mostly a good episode, but repeatedly mentioning Natalie Wynn in a sympathetic light without explaining what she was “cancelled” for is quite irresponsible.

Wynn repeatedly made statements in videos and on Twitter that were bigoted toward nonbinary and non-passing trans people. She apologized for some of these, only to say the same kind of crap again a couple months later. It finally came to a head in summer or fall of 2019 when she made a thread against the practice of asking for pronouns (in which she also said she felt like the last of the “old school transsexuals” despite having come out in like 2017), got called out again, apologized, and deactivated her Twitter for a short time before coming back and putting out a new video with a cameo from Buck Angel (whom she shouted out on Twitter when announcing the video). Buck Angel is a trans man famous as a porn star and infamous as a transmedicalist or “truscum”, i.e. someone who holds the view that transness is a Medical Condition™ and that anyone who doesn’t experience gender dysphoria (or experiences it in a way that isn’t True Dysphoria™) isn’t really trans. Quite a lot of nastiness and gatekeeping comes from transmeds, including broad anti-nonbinary bigotry. This was the final straw for many people, because no matter how many times Wynn apologizes, those apologies ring hollow when she goes right back to the same old shit. Then she made the “Cancellation” video a few months later, which led to quite a bit of dogpiling of people who had criticized her, thanks to screenshots included in the video (at least some of which didn’t @mention her, and whose authors even had her blocked). Thanks to the cancellation narrative, she has made an even bigger name for herself than she ever would have otherwise.

Now, I don’t expect cis people to know all of this without doing some digging, but considering this was first and foremost a trans intracommunity issue, I do think that cis allies have a responsibility to do that due diligence. Please, please be better about this in the future.

My device name theme

Current local device names are Rustboro and Saffron for my phones, Oldale for my laptop, Littleroot for my older desktop, and Mossdeep for my new desktop. (Yes, I have a bit of Hoenn favoritism; Ruby was the first game in the series I completed.)

October update: Rustboro, a Galaxy S4, has finally been retired. Driftveil, a Pixel 5a, has taken its place.

Well, here we are again. Third iteration of this blog.

Queer Etymology: Enby

There’s a lot of misinformation about various LGBT/Queer terms, especially when it comes to neologisms coined on social media where the origin may not be well-documented. For this post, I’m going to address the etymology of the word ‘enby’ and the misconceptions around it.

The context of this post is that within the last few months I have become aware of discussions around the term ‘enby’ and the initialism it is derived from: NB. Due to NB being an initialism also used in discussions of race to mean non-Black, some people of color have asked that people not use the initialism to mean nonbinary. A lot of people in these discussions have made the claim that the word ‘enby’ was coined by nonbinary people of color as an alternative to the initialism. This claim is false in all respects.

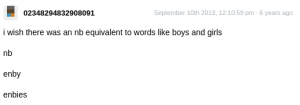

This September 2013 post, originally from Tumblr user revolutionator,[note] is the origin of the term:

i wish there was an nb equivalent to words like boys and girls

nb

enby

enbies

‘Enby’ is literally just intended as a nonbinary equivalent to ‘boy’ and ‘girl’, and the discussion of the initialism’s collision with ‘non-Black’ came years later, as far as I can tell. And as many nonbinary people have brought up, the term can be quite infantilizing[see 2021 addendum] and should not be used for people who don’t use it for themself.

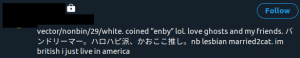

As for the other part of the false claim, that it was coined by a person of color, I’ll let this (partially redacted for privacy on a locked account) screenshot of the coiner’s Twitter profile say all that needs to be said about that:

Note: The link is to a Wayback archive of a reblog by Tumblr user faunmoss from 6 years ago as of this writing in 2019. The original post itself is on a blog that Vector last posted to in 2015; the blog now has a long string of numbers as its username and sits behind the post-2018 NSFW filter, which breaks direct links to posts outside the mobile app. Reblogging to drafts from mobile and checking the timestamp with XKit shows the OP was posted on 10 September 2013:

2021 Addendum: A couple of years later, I’m not really happy with how I phrased this. While ‘enby’ can be infantilizing, it’s no more so than the words it was coined as an analogue of (i.e. ‘boy’ and ‘girl’).